Diacritical Marks in Unicode

I won’t bury the lede: by the end of this article you should be able to write your name in crazy diacritics like this: Ḡ͓̟̟r̬e̱̬͔͑g̰ͮ̃͛ ̇̅T̆a̐̑͢ṫ̀ǔ̓͟m̮̩̠̟. This article is part of the Unicode and i18n series motivated by my work with internationalization in Firefox and the Unicode ICU4X sub-committee.

Unicode is made up of a variety of code points that can represent many things beyond just a simple letter. The code point itself is a numeric value, conventionally written in hexadecimal (base 16) with a U+ prefix. Let’s start by examining the code points of some Latin letters that are common in English text. The input below will update when changed and show the information about each code point. Refresh the page to reset the input.
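As a rough sketch of the notation, the snippet below prints the code points of a short string in the U+ form. The helper name `describeCodePoints` is made up for this example; `codePointAt` and `padStart` are standard JavaScript.

```javascript
// Hypothetical helper: format every code point of a string as U+XXXX.
function describeCodePoints(text) {
  // Spreading a string iterates by code point, not by UTF-16 code unit.
  return [...text].map((char) => {
    const hex = char.codePointAt(0).toString(16).toUpperCase();
    return `U+${hex.padStart(4, "0")}`;
  });
}

console.log(describeCodePoints("Hello"));
// ["U+0048", "U+0065", "U+006C", "U+006C", "U+006F"]
```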

In the table above, the “Unicode block” is an area of contiguous code points that are organized around some theme. It’s a useful way to look up similar characters. I typically use them as a search term and look up the Wikipedia page or some other website’s collection of code points.

Other languages and accent marks

At this point, the idea that a Unicode code point maps to a letter is a pretty easy abstraction to hold in our head. With the Latin letters used in English, we don’t need to worry about accents or anything that is funky (at least from a native English speaker’s perspective).

Well, it’s time to get funky with some Spanish. In the Spanish language, accents are used to mark which syllable of a word is stressed. There is also the ñ symbol, which is a different way of pronouncing the n. In this case it’s pronounced like “nya”. Both of these marks add additional information to the letter. These marks are known generically as “diacritics” or “diacritical marks”.

Scaling up the use of diacritical marks

Using more and more code points to support combinations of Latin letters scales only up to a certain point. Different languages have different support for diacritical marks, and they may not always combine against the Latin character set.

Early ASCII encoding had no support for these types of marks. In 1985 a new encoding standard was released, commonly referred to as Latin 1 but officially named ISO/IEC 8859-1. Unicode is backwards compatible with it: the first 256 Unicode code points match Latin 1, so every code point in the ¿Cómo estás? phrase above has the same value in both encodings.
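Here is a small sketch to check that claim in the web console. I wrote the phrase with escapes so the exact code points are unambiguous; the variable names are just for illustration.

```javascript
// "¿Cómo estás?" spelled with single (precomposed) code points.
const spanishPhrase = "\u00BFC\u00F3mo est\u00E1s?";

// Latin 1 covers the values 0x00 through 0xFF.
const fitsInLatin1 = [...spanishPhrase].every(
  (char) => char.codePointAt(0) <= 0xff
);

console.log(fitsInLatin1);
// true
```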

Ok, so we were only a little “funky” with Spanish, but let’s have some more fun with a tonal language: Vietnamese (which I do not speak). English does not encode tone information into any part of the written language. It’s what you call a non-tonal language. However, in tonal languages such as Vietnamese, the relative pitch of a word changes the actual meaning of what is spoken.

I found this wonderful article explaining Vietnamese typography and tone marks. To summarize the article, the tone marks affect the pitch of what is spoken. For instance adding a hook above ả ẻ ỉ ỏ ủ ỷ denotes a mid-low dropping pitch. An underdot ạ ẹ ị ọ ụ ỵ denotes a low dropping pitch. These are all the same vowels, but with different pitches being used.

The combinatorial logic here is getting quite large to handle, but Unicode still provides the code points for handling all of these diacritical marks. Either the marks existed in the original Unicode blocks, or they were added to various supplemental Latin blocks. Here you can see many of them represented in the Unicode block “Latin Extended Additional”.

Another approach

To generalize the use of diacritics across languages, Unicode provides a mechanism known as “combining diacritical marks”. Here multiple code points are required to form one character. To use technical terminology, multiple code points that combine into a single group are known as a grapheme cluster. Let’s explore the phrase from above. In this new encoding scheme, the diacritical marks follow the Latin letter as a separate code point.

Note that in the tool that I wrote to display code points, whenever there is a combining mark, the tool applies it to the ◌ dotted circle character to make it clear what is going on.
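The same idea can be sketched in a few lines of JavaScript. This is not the tool’s actual code, just an approximation with made-up names: it lists each code point and puts any combining mark on a dotted circle, using the `\p{M}` Unicode property escape to detect marks.

```javascript
const DOTTED_CIRCLE = "\u25CC";

// Decomposed spelling of "¿Cómo estás?": each accent follows its base letter.
const decomposedPhrase = "\u00BFCo\u0301mo esta\u0301s?";

// Hypothetical helper: describe each code point, one entry per code point.
function showCodePoints(text) {
  return [...text].map((char) => {
    const hex = char.codePointAt(0).toString(16).toUpperCase().padStart(4, "0");
    // \p{M} matches any code point in the Unicode "Mark" general category.
    const display = /\p{M}/u.test(char) ? DOTTED_CIRCLE + char : char;
    return `U+${hex} ${display}`;
  });
}

console.log(showCodePoints("o\u0301"));
// ["U+006F o", "U+0301 ◌́"]

// Two combining marks were added, so there are 14 code points instead of 12.
console.log(showCodePoints(decomposedPhrase).length);
// 14
```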

My favorite place to start experimenting with these combining marks is to explore the different marks available in the Combining Diacritical Marks code block.

How this affects your code

If you were to do a byte by byte comparison, or a code point to code point comparison of these two strings they would not be equal, but the displayed text is equivalent. It is perfectly valid to represent this text with both code point representations.

So how does this affect doing comparisons in code?

Let’s examine JavaScript’s behavior to get an idea. Feel free to open the web console and try it out.

```javascript
const nonCombining = "Bạn khỏe không?";

// You can put code points directly into a string by using "\u" then
// the hex representation of the code point.
const combining = "Ba\u0323n kho\u0309e kho\u0302ng?";

// Note the difference in string lengths.
console.log(nonCombining.length);
//> 15
console.log(combining.length);
//> 18

// Doing a string comparison doesn't work.
console.log(nonCombining === combining);
//> false

// Normalize the results for a true comparison.
console.log(nonCombining.normalize() === combining.normalize());
//> true

// Normalizing here composes the combining marks.
console.log(combining.normalize().length === nonCombining.length);
//> true
```

Normalization of combining and non-combining marks

The magic in the last calls with .normalize() is that it runs the Unicode normalization algorithm to create an equivalent version of the text by combining the code points. When two or more code points turn into a single one, this is called composition. It’s also possible to turn a single code point back into multiple with decomposition.

Normalization according to Unicode can be configured to work in either direction of composition or decomposition. Let’s take a moment to better understand what is happening with the String.prototype.normalize function, which can also be configured with a specific strategy.

According to MDN the normalize function takes one optional parameter, the form argument which takes one of the following four values:

"NFC" – Canonical Decomposition, followed by Canonical Composition.

"NFD" – Canonical Decomposition.

"NFKC" – Compatibility Decomposition, followed by Canonical Composition.

"NFKD" – Compatibility Decomposition.

When I first came across this, it took me quite some time to figure out what these terms meant, and I hope to simplify the jargon here. The two most interesting forms to consider for our purposes are NFC and NFD. These stand for “normalization form composition” and “normalization form decomposition”. You might have some intuition as to what these two modes of normalization will do.
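As a brief aside before digging into NFC and NFD, here is a small sketch of what the “compatibility” forms do differently. I’m using the fi ligature as the example because it has a compatibility decomposition but no canonical one.

```javascript
const ligature = "\uFB01"; // ﬁ LATIN SMALL LIGATURE FI

// The canonical forms leave the ligature alone.
console.log(ligature.normalize("NFC") === ligature); // true
console.log(ligature.normalize("NFD") === ligature); // true

// The compatibility forms replace it with the plain letters "f" and "i".
console.log(ligature.normalize("NFKC")); // "fi"
console.log(ligature.normalize("NFKD").length); // 2
```

The compatibility forms lose information about the original text, which is why the rest of this article sticks to the canonical forms.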

First, let’s consider the (made up) grapheme cluster that produces an o with a breve mark both above and below. In the input box below I will include multiple ways to describe this character:

Composed form

Let’s start with the composed form. There’s only one way to express this: the LATIN SMALL LETTER O WITH BREVE is followed by the COMBINING BREVE BELOW.

Decomposed form #1

The decomposed form uses the normal LATIN SMALL LETTER O, and then adds both combining marks afterwards.

Decomposed form #2

However, the ordering here is arbitrary. This same grapheme cluster can be described with the two combining marks switched.

The decomposed form – the NFD strategy

First let’s consider the second strategy listed above for normalization, the NFD strategy. This is defined as “canonical decomposition”. Decomposing breaks composed characters apart into separate code points, and orders the combining marks in a canonical way. This means that only one of the decomposed forms above will match after being normalized with this strategy. This happens to be decomposed form #2, because the breve below has a lower canonical combining class (220) than the breve above (230) and so sorts first.

Here is code that shows this in the web console.

```javascript
const composed = "ŏ\u032E";
const decomposed1 = "o\u0306\u032E";
const decomposed2 = "o\u032E\u0306";

// Normalize the text into the decomposed form:
console.log(composed.normalize("NFD") === decomposed2); // true
console.log(decomposed1.normalize("NFD") === decomposed2); // true
console.log(decomposed2.normalize("NFD") === decomposed2); // true
```

The composed form – the NFC strategy

Next, let’s apply the NFC strategy. This strategy is defined as “canonical decomposition, followed by canonical composition.” Let’s break that down and work through the ŏ̮ above.

The first step is canonical decomposition, which was described above. Now that the code points are in their canonical decomposed form, they go through “canonical composition”. All three forms of ŏ̮ above decompose to the same representation, which then composes into the “composed form” listed above. Explicitly, the LATIN SMALL LETTER O and COMBINING BREVE get composed into the LATIN SMALL LETTER O WITH BREVE.

```javascript
const composed = "ŏ\u032E";
const decomposed1 = "o\u0306\u032E";
const decomposed2 = "o\u032E\u0306";

// Normalize the text into the composed form:
console.log(composed.normalize("NFC") === composed); // true
console.log(decomposed1.normalize("NFC") === composed); // true
console.log(decomposed2.normalize("NFC") === composed); // true
```

A quick aside about decomposition

While decomposition can break a single code point into multiple combining code points, it can also map a single code point to another single code point. This is relatively rare in Latin scripts but one quick example is the Latin Kelvin sign K which is code point U+212A. It has a canonical decomposition to the Latin capital letter K which has the code point U+004B.

As can be seen from this Kelvin example, normalizing text to NFD and then back to NFC does not guarantee a round trip back to the original code points.
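The Kelvin sign makes for a quick experiment in the web console. This sketch only uses the two code points mentioned above; the variable names are my own.

```javascript
const kelvin = "\u212A"; // KELVIN SIGN

// Decompose, then compose again.
const roundTrip = kelvin.normalize("NFD").normalize("NFC");

// The result is the plain capital letter K, not the original Kelvin sign.
console.log(roundTrip === kelvin); // false
console.log(roundTrip === "K"); // true
console.log(roundTrip.codePointAt(0).toString(16)); // "4b"
```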

Other code ecosystems

Normalization isn’t unique to the JavaScript ecosystem. Consider this Python code:

```python
import unicodedata

# Use "NFC" to compose the code points.
unicodedata.normalize("NFC", "Ba\u0323n kho\u0309e kho\u0302ng?")
# "Bạn khỏe không?"

# Use "NFD" to decompose the code points.
unicodedata.normalize("NFD", "Bạn khỏe không?")
# "Ba\u0323n kho\u0309e kho\u0302ng?"
```

Normalization on the web

While there are many different orders and formats to represent text, the recommendation for content authors is to use the composed NFC form in their text. This is an explicit recommendation from the W3C, which also recommends consistent usage of ordering of code points by content authoring systems. Most content is in fact authored in a form close to NFC. However, there are no strong guarantees that this will be done, or that NFC forms are available for a given language or script.

How normalization affects your own code

If you are writing truly internationalized software, any input validation for string comparison must handle normalization in order to give correct results. As shown above, combining diacritics don’t have to be in the same order. Normalization ensures a canonical representation of the text.
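One way to apply this is to normalize both sides before comparing. The helper below is a minimal sketch with a made-up name, and it assumes NFC is the form you want to standardize on.

```javascript
// Hypothetical helper: compare two strings by canonical equivalence.
function canonicallyEqual(a, b) {
  return a.normalize("NFC") === b.normalize("NFC");
}

// The same marks in a different order still match.
console.log(canonicallyEqual("o\u0306\u032E", "o\u032E\u0306")); // true

// Decomposed and precomposed spellings of "Bạn" match too.
console.log(canonicallyEqual("Ba\u0323n", "B\u1EA1n")); // true

// Text that is actually different stays different.
console.log(canonicallyEqual("Ban", "B\u1EA1n")); // false
```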

Normalization is part of the process for sorting text (collation) as well. Collation is a bigger topic, and includes locale-specific sorting rules. When I first interacted with SQL databases when I was younger, these collation settings were quite mysterious, but it turns out getting them correct is important for internationalized software.
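In JavaScript, locale-aware sorting is available through `Intl.Collator`. The sketch below compares it with the default sort; the exact results depend on the locale data that ships with your JavaScript engine, so treat the German ordering as what I would expect from a typical browser rather than a guarantee.

```javascript
const words = ["z", "\u00E4", "a"]; // "ä" as a single code point

// The default sort compares UTF-16 code unit values, which puts
// "ä" (U+00E4) after "z" (U+007A).
console.log([...words].sort());
// ["a", "z", "ä"]

// A German collator sorts "ä" alongside "a".
const germanCollator = new Intl.Collator("de");
console.log([...words].sort(germanCollator.compare));
// ["a", "ä", "z"]

// The collator also treats canonically equivalent strings as equal.
console.log(germanCollator.compare("\u00E4", "a\u0308"));
// 0
```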

If you need to know more about these normalization strategies I recommend squinting very slowly at the Unicode spec that outlines them.

Non-Latin scripts

Vietnamese is certainly a non-Western language, but it is using a Western (Latin) script. Below are the top scripts actively used in the world listed according to the population size that is actively using them.

| Rank | Script | Example | Population Using |
|---|---|---|---|
| 1 | Latin | Latin | Lots |
| 2 | Chinese | 汉字 | 1,340,000,000 |
| 3 | Arabic | العربية | 660,000,000 |
| 4 | Devanagari | देवनागरी | 608,000,000 |
| 5 | Bengali–Assamese | বাংলা-অসমীয়া | 265,000,000 |
| 6 | Cyrillic | Кирилица | 250,000,000 |

Let’s pick the fourth most popular script in the world, Devanagari. It’s used in places like India for various languages including Hindi. The script has some interesting properties where combining marks must be used and there is no equivalent single code point. Below is the phrase “how are you” (thanks Google Translate.)

The Devanagari script is what’s known as an abugida writing system. This indicates that vowels are not explicitly written as separate symbols, but are written as a unit of consonant-vowel sequences. Each unit is made up of the primary consonant plus a secondary vowel notation. Examining the output above, the combining vowel marks are being applied to the preceding (consonant) letter.

We can actually verify that there is no combined code point that can represent this text by running the Unicode normalization algorithm. From the web console you can do this quickly.

```javascript
console.log("आप कैसे हैं".length);
// 11
console.log("आप कैसे हैं".normalize().length);
// 11
```

After normalization, the length of the string is still exactly the same. This shows that combining code points are required to represent certain concepts as Unicode is designed.
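To see which of those 11 code points are combining marks, here is a quick sketch using the `\p{M}` Unicode property escape. I spelled the phrase with escapes so the code points are explicit; the variable names are only for illustration.

```javascript
// "आप कैसे हैं" written out code point by code point.
const hindiPhrase = "\u0906\u092A \u0915\u0948\u0938\u0947 \u0939\u0948\u0902";

// \p{M} matches any code point in the Unicode "Mark" general category.
const hindiMarks = [...hindiPhrase].filter((char) => /\p{M}/u.test(char));

// Two vowel signs on "कै" and "से", then a vowel sign and a nasal mark on "हैं".
console.log(hindiMarks.length); // 4

// Neither direction of normalization changes the text.
console.log(hindiPhrase.normalize("NFC") === hindiPhrase); // true
console.log(hindiPhrase.normalize("NFD") === hindiPhrase); // true
```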

Combining marks in emojis

Diacritics aren’t the only use of combining marks. The emoji is the poster child of Unicode, and what most people know it for. Combining marks can be used to customize the emojis, making for a more inclusive character set that people can use. Here let’s examine the thumbs up emoji:

The emojis combine using designators from the Fitzpatrick scale, which is a numeric classification of human skin tone.
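The sequence is easy to build by hand in the web console. In this sketch I picked the type-4 modifier arbitrarily; the five skin tone modifiers occupy the code points U+1F3FB through U+1F3FF.

```javascript
const thumbsUp = String.fromCodePoint(0x1f44d); // THUMBS UP SIGN
const skinToneType4 = String.fromCodePoint(0x1f3fd); // EMOJI MODIFIER FITZPATRICK TYPE-4

// The modifier simply follows the emoji it applies to.
const tonedThumbsUp = thumbsUp + skinToneType4;

// Two code points, but four UTF-16 code units, since both code points
// are above 0xffff and need a surrogate pair each.
console.log([...tonedThumbsUp].length); // 2
console.log(tonedThumbsUp.length); // 4
```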

Other combining codepoints in emojis

For a more complicated example, consider the three mages below. There is the non-gendered mage, and then a female and male mage.

Let’s break down all of the code points for the female mage emoji. The MAGE emoji code point is followed by the ZERO WIDTH JOINER. This code point doesn’t display on the screen, but specifies that the code points on either side of it should be joined together. Following that is the FEMALE SIGN code point. The character displayed in the table for the female sign is the text representation. In order to emojify it, the final code point in the grapheme cluster is the VARIATION SELECTOR-16. This specifies that the preceding code point should be treated as the emoji form, not text form. That’s quite a few code points to describe a single emoji.

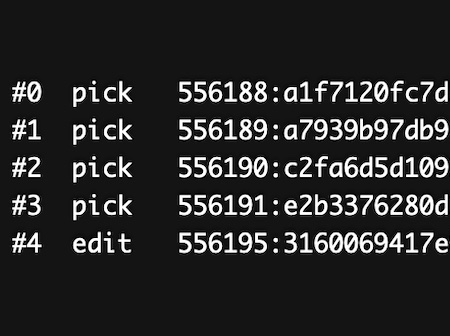

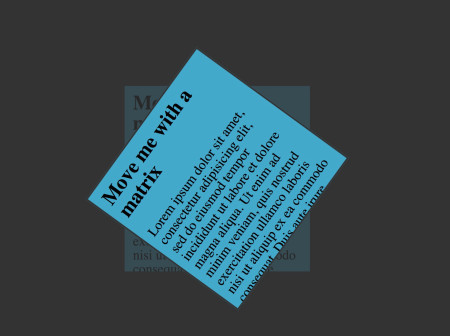
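The female mage sequence described above can be rebuilt from its four code points. This sketch also counts grapheme clusters with `Intl.Segmenter`, which I’m assuming is available in your browser or runtime; older engines don’t ship it.

```javascript
// MAGE, ZERO WIDTH JOINER, FEMALE SIGN, VARIATION SELECTOR-16
const femaleMage = String.fromCodePoint(0x1f9d9, 0x200d, 0x2640, 0xfe0f);

const mageCodePoints = [...femaleMage].map(
  (char) => "U+" + char.codePointAt(0).toString(16).toUpperCase().padStart(4, "0")
);
console.log(mageCodePoints);
// ["U+1F9D9", "U+200D", "U+2640", "U+FE0F"]

// Four code points take five UTF-16 code units...
console.log(femaleMage.length); // 5

// ...but they form a single grapheme cluster.
const segmenter = new Intl.Segmenter("en", { granularity: "grapheme" });
console.log([...segmenter.segment(femaleMage)].length); // 1
```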

Time to mess with the tech

It’s time to do things that we technically can, but probably shouldn’t with these combining marks. Before abusing this new power, please know that this technique breaks accessibility for users of screen readers. It’s fun to play around with the technology, but I would limit it to code playgrounds and not real-world uses.

To play with combining marks, it’s not really feasible to type these code points directly with your keyboard, so I like being able to get them into my copy and paste buffer. To do this I open my web console and type:

```javascript
// A code point can be manually typed in with a \u followed
// by the hex number. The `copy` function is only available
// in the web console. Make sure not to enter the decimal value!
// This only works for code points ranged 0x0000 - 0xffff.
copy("\u0301");

// Or it can be constructed from a series of hex values. This
// works for the full range of code points.
copy(String.fromCodePoint(0x0041, 0x0301));
// "Á"
```

Now review the list of combining diacritical marks and the supplement to see the different diacritic code points to play with.

Feel free to try out your new powers to combine marks together by pasting them into existing text.

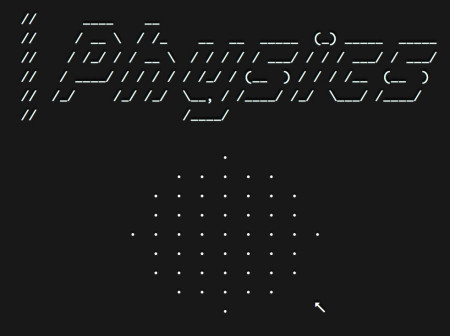

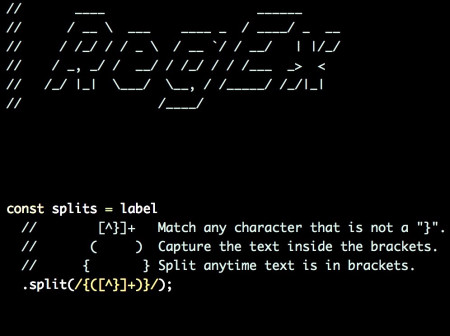

Chaos Mode

Now for more evil, let’s write some JavaScript.

```javascript
/**
 * Adds a chaotic number of diacritics to a string.
 */
function diacriticChaosMode(string, diacriticsCount = 5) {
  // Use the Unicode block for combining diacritics.
  // https://en.wikipedia.org/wiki/Combining_Diacritical_Marks
  const blockRangeMin = 0x0300;
  const blockRangeMax = 0x036f;
  // Add one so that the last code point in the block can be picked too.
  const blockRangeSize = blockRangeMax - blockRangeMin + 1;

  // Build up the result one letter at a time.
  let result = "";
  // Iterating the string directly walks it by code point, so characters
  // outside of the range 0x0000 - 0xffff are not split in half.
  for (const letter of string) {
    // Add on the base letter.
    result += letter;

    // Randomly add on a certain number of diacritics.
    const iterations = Math.random() * diacriticsCount;
    for (let i = 0; i < iterations; i++) {
      // Pick a random combining code point.
      const codePoint =
        blockRangeMin + Math.floor(blockRangeSize * Math.random());
      // Concatenate the string parts.
      result += String.fromCodePoint(codePoint);
    }
  }
  return result;
}

diacriticChaosMode("Greg Tatum");
// The output is random, for example:
// "Ḡ͓̟̟r̬e̱̬͔͑g̰ͮ̃͛ ̇̅T̆a̐̑͢ṫ̀ǔ̓͟m̮̩̠̟"
```
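If you ever need to clean up after chaos mode, the process can be roughly reversed. The sketch below is my own made-up helper, not part of any library: it decomposes the text and then removes every code point in the Unicode “Mark” category. Be aware that it also strips legitimate accents, and it would remove required marks in scripts like Devanagari, so it is only a toy.

```javascript
// Hypothetical helper: remove all combining marks from a string.
function stripCombiningMarks(text) {
  return text
    // Decompose first so that precomposed letters give up their marks.
    .normalize("NFD")
    // \p{M} matches any code point in the "Mark" general category.
    .replace(/\p{M}/gu, "")
    .normalize("NFC");
}

console.log(stripCombiningMarks("G\u0304\u0353r\u032Ce\u0331g\u0330"));
// "Greg"

// Legitimate accents are removed as well.
console.log(stripCombiningMarks("\u00BFC\u00F3mo est\u00E1s?"));
// "¿Como estas?"
```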

Try it out

The following text input lets you type or paste text dynamically to apply this chaos mode.

The importance of combining characters

Diacritical marks are used in many languages and scripts across the world. They add to the complexity of writing internationalizable software, but it’s important to get it right in order to support more of the world in the modern technological era.

These combining diacritical marks aren’t the only combining code points that form grapheme clusters. As seen in emojis, different code points are combined together to create new emojis. The complexity of combining marks isn’t limited to just emojis. For instance in the Devanagari script there are certain codepoints that can be used to glue characters before and after together.

If you enjoyed this article I would suggest reading more in my Unicode and i18n series.