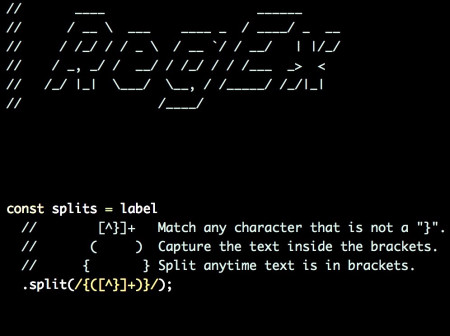

Encoding Text, UTF-32 and UTF-16 – How Unicode Works (Part 1)

The standard for how to represent human writing for many years was ASCII, or American Standard Code for Information Interchange. This representation reserved 7 bits for encoding a character. This served early computing well, but did not scale as computers were used in more and more languages and cultures across the world. This article explains how this simple encoding grew into a standard that aims to represent the writing systems of every culture on Earth. This is Unicode.

At the time of this writing, I recently switched to the Firefox Internationalization team and began working on a Unicode sub-committee to help implement ICU4X. This involves reading lots of specs, and I wanted to take the time to write out my interest in the encodings of UTF-8, 16, and 32.

From first principles, let’s design our own simple encoding of the English language. Let’s see how many bits we need to encode the alphabet. Representing a to z can be done with 26 numbers. So let’s do that.

Imaginary 5 bit Character Encoding

Letter Number Hex Binary

------ ------ ---- ------

a 0 0x00 0

b 1 0x01 1

c 2 0x02 10

d 3 0x03 11

e 4 0x04 100

f 5 0x05 101

g 6 0x06 110

h 7 0x07 111

i 8 0x08 1000

j 9 0x09 1001

k 10 0x0a 1010

l 11 0x0b 1011

m 12 0x0c 1100

n 13 0x0d 1101

o 14 0x0e 1110

p 15 0x0f 1111

q 16 0x10 10000

r 17 0x11 10001

s 18 0x12 10010

t 19 0x13 10011

u 20 0x14 10100

v 21 0x15 10101

w 22 0x16 10110

x 23 0x17 10111

y 24 0x18 11000

z 25 0x19 11001

At this point, we’ve needed 5 bits to represent 26 letters. However, we still have 6 values (or “code points”) left, 26-31 if written as base 10 numbers, or 0x1a to 0x1f if written as base 16. We can shove a few useful characters into the remaining values.

Character Number Hex Binary

--------- ------ ---- ------

. 26 0x1a 11010

, 27 0x1b 11011

' 28 0x1c 11100

" 29 0x1d 11101

$ 30 0x1e 11110

! 31 0x1f 11111
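As a minimal sketch of how the imaginary encoding in the two tables above could be used, the following maps characters to their 5 bit values and back. The names `ALPHABET`, `encodeFiveBit`, and `decodeFiveBit` are made up for this illustration.

```javascript
// The 32 characters of the imaginary encoding, in table order.
// A character's index in this string is its code point.
const ALPHABET = "abcdefghijklmnopqrstuvwxyz.,'\"$!";

// Hypothetical helper: map each character to its 5 bit value (0 to 31).
function encodeFiveBit(text) {
  return Array.from(text, (character) => {
    const value = ALPHABET.indexOf(character);
    if (value === -1) {
      throw new Error(`"${character}" is not in the 5 bit character set.`);
    }
    return value;
  });
}

// Hypothetical helper: map 5 bit values back to characters.
function decodeFiveBit(values) {
  return values.map((value) => ALPHABET[value]).join("");
}

const encoded = encodeFiveBit("hello!");
console.log(encoded); // [ 7, 4, 11, 11, 14, 31 ]
console.log(decodeFiveBit(encoded)); // "hello!"
```

Anything outside of these 32 characters, including uppercase letters and spaces, throws an error, which is a good hint at why this character set is "somewhat bad".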

Our 5 bits are enough for encoding our somewhat bad character set. However, the early ASCII encoding system only needed 7 bits, which is 2 more than our encoding. Modern processors naturally operate on bits that come in multiples of 8. This unit of operation is known as a “word”. For instance my current laptop has a word size of 64 bits. So when my processor adds two numbers, it takes two 64 bit numbers, and runs the add operation.

When ASCII was invented a common word size was 8 bits. In fact, the hole punched tape frequently used with computers at the time had 8 holes (or bits) per line. However, the committee decided that 7 bits was a better choice: transmitting bits took both time and money, and 7 bits were sufficient for their character set needs. The 7 bit encoding could handle values 0 to 127, or 0x00 to 0x7f. This is 96 more characters than we could represent with our 5 bit scheme.

Rather than fill out the imaginary encoding above, it may be useful to examine the complete ASCII encoding scheme.

Complete ASCII Encoding

---------------------- ----------------------- --------------------- -----------------------

NUL 0 0x00 00000000 " " 32 0x20 00100000 @ 64 0x40 01000000 ` 96 0x60 01100000

SOH 1 0x01 00000001 ! 33 0x21 00100001 A 65 0x41 01000001 a 97 0x61 01100001

STX 2 0x02 00000010 " 34 0x22 00100010 B 66 0x42 01000010 b 98 0x62 01100010

ETX 3 0x03 00000011 # 35 0x23 00100011 C 67 0x43 01000011 c 99 0x63 01100011

EOT 4 0x04 00000100 $ 36 0x24 00100100 D 68 0x44 01000100 d 100 0x64 01100100

ENQ 5 0x05 00000101 % 37 0x25 00100101 E 69 0x45 01000101 e 101 0x65 01100101

ACK 6 0x06 00000110 & 38 0x26 00100110 F 70 0x46 01000110 f 102 0x66 01100110

BEL 7 0x07 00000111 ' 39 0x27 00100111 G 71 0x47 01000111 g 103 0x67 01100111

BS 8 0x08 00001000 ( 40 0x28 00101000 H 72 0x48 01001000 h 104 0x68 01101000

HT 9 0x09 00001001 ) 41 0x29 00101001 I 73 0x49 01001001 i 105 0x69 01101001

LF 10 0x0A 00001010 * 42 0x2A 00101010 J 74 0x4A 01001010 j 106 0x6A 01101010

VT 11 0x0B 00001011 + 43 0x2B 00101011 K 75 0x4B 01001011 k 107 0x6B 01101011

FF 12 0x0C 00001100 , 44 0x2C 00101100 L 76 0x4C 01001100 l 108 0x6C 01101100

CR 13 0x0D 00001101 - 45 0x2D 00101101 M 77 0x4D 01001101 m 109 0x6D 01101101

SO 14 0x0E 00001110 . 46 0x2E 00101110 N 78 0x4E 01001110 n 110 0x6E 01101110

SI 15 0x0F 00001111 / 47 0x2F 00101111 O 79 0x4F 01001111 o 111 0x6F 01101111

DLE 16 0x10 00010000 0 48 0x30 00110000 P 80 0x50 01010000 p 112 0x70 01110000

DC1 17 0x11 00010001 1 49 0x31 00110001 Q 81 0x51 01010001 q 113 0x71 01110001

DC2 18 0x12 00010010 2 50 0x32 00110010 R 82 0x52 01010010 r 114 0x72 01110010

DC3 19 0x13 00010011 3 51 0x33 00110011 S 83 0x53 01010011 s 115 0x73 01110011

DC4 20 0x14 00010100 4 52 0x34 00110100 T 84 0x54 01010100 t 116 0x74 01110100

NAK 21 0x15 00010101 5 53 0x35 00110101 U 85 0x55 01010101 u 117 0x75 01110101

SYN 22 0x16 00010110 6 54 0x36 00110110 V 86 0x56 01010110 v 118 0x76 01110110

ETB 23 0x17 00010111 7 55 0x37 00110111 W 87 0x57 01010111 w 119 0x77 01110111

CAN 24 0x18 00011000 8 56 0x38 00111000 X 88 0x58 01011000 x 120 0x78 01111000

EM 25 0x19 00011001 9 57 0x39 00111001 Y 89 0x59 01011001 y 121 0x79 01111001

SUB 26 0x1A 00011010 : 58 0x3A 00111010 Z 90 0x5A 01011010 z 122 0x7A 01111010

ESC 27 0x1B 00011011 ; 59 0x3B 00111011 [ 91 0x5B 01011011 { 123 0x7B 01111011

FS 28 0x1C 00011100 < 60 0x3C 00111100 \ 92 0x5C 01011100 | 124 0x7C 01111100

GS 29 0x1D 00011101 = 61 0x3D 00111101 ] 93 0x5D 01011101 } 125 0x7D 01111101

RS 30 0x1E 00011110 > 62 0x3E 00111110 ^ 94 0x5E 01011110 ~ 126 0x7E 01111110

US 31 0x1F 00011111 ? 63 0x3F 00111111 _ 95 0x5F 01011111 DEL 127 0x7F 01111111

This table presents some interesting “letters” such as NUL 0x00, BEL 0x07, and BS 0x08. These are known as control characters. Our imaginary 5 bit encoding had no need for these non-printing characters, but early ASCII found them useful for transmitting information to terminals. NUL 0x00 is used in languages like C to signal the end of a string. BEL 0x07 could be used to signal to a device to emit a sound or a flash. BS 0x08 or backspace, may overprint the previous character.

Moving beyond English

For this entire article so far, I’ve typed only letters that could be represented in ASCII. As a US citizen whose native language is English, this is pretty useful. However, the world is much bigger than that. I speak Spanish as well, and I simply can’t represent ¡Hola! ¿Qué tal? in just ASCII. But hold on, ASCII only contains 7 bits, and modern computing typically has word sizes that are multiples of 8 bits. Expanding the range from 7 bits to 8 bits expands the range of symbols from 128 to 256. Now we can add more letters into that extra bit and represent ¡ ¿ é. This encoding is known as the Latin 1 encoding, or ISO/IEC 8859-1. This encoding includes control characters, but it expands the number of printing characters to 191.

Character Number Hex Binary

--------- ------ ---- --------

¡ 161 0xa1 10100001

¿ 191 0xbf 10111111

é 233 0xe9 11101001

^ the 8th bits are all 1 for these new characters
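The table above can be reproduced in a web console. This is a small sketch, with `eighthBitIsSet` as a made-up helper name, that checks whether a character's value needs the 8th bit. The characters are written as escape sequences so the result does not depend on how the snippet is pasted or saved.

```javascript
// Hypothetical helper: true when the character's value has the 8th bit set,
// meaning it is outside of the 7 bit ASCII range.
function eighthBitIsSet(character) {
  const value = character.codePointAt(0);
  return (value & 0b1000_0000) !== 0;
}

// "\u00a1" is ¡, "\u00bf" is ¿, and "\u00e9" is é.
for (const character of ["\u00a1", "\u00bf", "\u00e9", "e"]) {
  const value = character.codePointAt(0);
  const binary = value.toString(2).padStart(8, "0");
  console.log(character, value, binary, eighthBitIsSet(character));
}
// ¡ 161 10100001 true
// ¿ 191 10111111 true
// é 233 11101001 true
// e 101 01100101 false
```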

What’s notable is that by adding only 1 more bit, we can now mostly represent many more languages across the world. Not only that, but Latin 1 is backwards compatible with ASCII. This means legacy documents can still be interpreted just fine.

Languages (arguably) supported by Latin 1: Afrikaans, Albanian, Basque, Breton, Corsican, English, Faroese, Galician, Icelandic, Irish, Indonesian, Italian, Leonese, Luxembourgish, Malay, Manx, Norwegian, Occitan, Portuguese, Rhaeto-Romanic, Scottish Gaelic, Scots, Southern Sami, Spanish, Swahili, Swedish, Tagalog, and Walloon.

Supporting all of human culture

We started with an imaginary 5 bit encoding scheme, moved on to 7 bit ASCII, and finally to an 8 bit Latin 1 encoding. How many more bits do we need to theoretically support all known and existing human cultures? This is a pretty tall order, but also a noble goal.

Enter Unicode.

Unicode aims to be a universal character encoding. At this point UTF-8 (or Unicode Transformation Format, 8 bits) is the de-facto winner in encoding text, especially on the internet. So what is Unicode precisely? According to Unicode’s FAQ:

Unicode covers all the characters for all the writing systems of the world, modern and ancient. It also includes technical symbols, punctuations, and many other characters used in writing text. The Unicode Standard is intended to support the needs of all types of users, whether in business or academia, using mainstream or minority scripts.

Our imaginary 5 bit system needed to encode 26 letters of the lowercase English alphabet, while Unicode can support 1,114,112 different code points. Now it’s time to define a code point. This is the number representing some kind of character that is being encoded. Keep in mind that in ASCII, not all of these code points represented printable characters, like the BEL. This is true in Unicode, with even stranger types of code points.

It’s customary to display code points in hex representation. So for instance 0x61 is the hex value of the code point for "a" in ASCII. In base-10 notation this would be the number 97. Unicode is backwards compatible with ASCII, and to signify that the code point represents a Unicode code point, its hex value is typically prefixed with U+. So the “Latin Small Letter A” is designated as U+0061 in Unicode.

Here are some examples of different Unicode characters and their respective code points. Note that so far, all of these code points are completely backwards compatible with ASCII and Latin 1. However, there are still potentially 1,114,112 different code points, ranging from 0x00 to 0x10FFFF that can be represented in Unicode.

ASCII Range Latin1 Range

----------- ------------

U+0041 A U+00C0 À

U+0042 B U+00C1 Á

U+0043 C U+00C2 Â

U+0061 a U+00E0 à

U+0062 b U+00E1 á

U+0063 c U+00E2 â
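To make the U+ notation concrete, here is a short sketch that formats a number the way the table above does. `formatCodePoint` is a name made up for this example; it pads to at least four hex digits, which matches the common convention.

```javascript
// Hypothetical helper: format a code point number in the customary U+ notation.
function formatCodePoint(codePoint) {
  return "U+" + codePoint.toString(16).toUpperCase().padStart(4, "0");
}

console.log(formatCodePoint("a".codePointAt(0))); // "U+0061"
console.log(formatCodePoint(0xc0)); // "U+00C0"
console.log(formatCodePoint(0x10ffff)); // "U+10FFFF"
```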

How to fit 1,114,112 code points

In the 5 bit encoding scheme we could fit 32 code points. Let’s examine how many code points can fit into different sized words.

Type Size Type Name Max Code Points Visual Bit Size

--------- ----------- --------------- ---------------------------------------

7 bits (historic) 128 000_0000

8 bits u8 256 0000_0000

16 bits u16 65,536 0000_0000_0000_0000

32 bits u32 4,294,967,296 0000_0000_0000_0000_0000_0000_0000_0000

From here on out, I’m going to use the Rust type names u8, u16, and u32 for the unsigned integer types that are used to encode the code points.

Encoding code points – UTF-32

From here, it’s important to understand the distinction that the code points are documented with hex values, but that doesn’t necessarily mean that is how they are actually represented in binary. It’s time to discuss encodings.

The simplest encoding method for Unicode is UTF-32, which uses a u32 for each code point. The main advantage of this approach is its simplicity: it’s fixed length. If you have an array of characters, all you have to do is index the array at some arbitrary point, and you are guaranteed that there is a code point there that matches your index position. The biggest problem here is the waste of space. Modern computing has word sizes divisible by 8. Let’s examine the bit layout of u32. (Note, I’m ignoring byte endianness for the sake of simplicity here.)

The max code points that can be represented in a u32 according to the table above is 4,294,967,296, which is way more space than is needed by Unicode’s 1,114,112 potential code points.

UTF-32 encoding wastes at least 11 bits per code point, as can be seen by this table:

Size Max Code Points Visual Bit Size

-------- --------------- --------------------------------------

Not quite enough space: 20 bits 1,048,576 0000_0000_0000_0000_0000

Minimum to fit Unicode: 21 bits 2,097,152 0_0000_0000_0000_0000_0000

Actual u32 size: 32 bits 4,294,967,296 0000_0000_0000_0000_0000_0000_0000_0000

Wasted space: 11 bits ^^^^ ^^^^ ^^^
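The numbers in this table can be double-checked with a bit of arithmetic in the web console. This is only a sketch; `bitsNeeded` is a made-up helper that counts the binary digits of a value.

```javascript
// The largest Unicode code point.
const MAX_CODE_POINT = 0x10ffff;

// Hypothetical helper: how many bits are needed to store a non-negative integer.
function bitsNeeded(value) {
  return value.toString(2).length;
}

console.log(2 ** 20); // 1048576, not quite enough for 1,114,112 code points
console.log(2 ** 21); // 2097152, the first power of two that fits them all
console.log(bitsNeeded(MAX_CODE_POINT)); // 21
console.log(32 - bitsNeeded(MAX_CODE_POINT)); // 11 wasted bits in a u32
```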

It’s really even worse in terms of representing common English texts. Consider the word "Hello" – the encoding visually looks like:

UTF-32 ASCII

===================================================== ==============

Hex Binary Hex Binary

----------- --------------------------------------- ---- --------

H 0x0000_0048 0000_0000_0000_0000_0000_0000_0100_1000 0x48 100_1000

e 0x0000_0065 0000_0000_0000_0000_0000_0000_0110_0101 0x65 110_0101

l 0x0000_006c 0000_0000_0000_0000_0000_0000_0110_1100 0x6c 110_1100

l 0x0000_006c 0000_0000_0000_0000_0000_0000_0110_1100 0x6c 110_1100

o 0x0000_006f 0000_0000_0000_0000_0000_0000_0110_1111 0x6f 110_1111

The UTF-32 memory for this short string is primarily filled with zeros. This is a pretty hefty memory price to pay for a simple implementation. The next encodings will present more memory-optimized solutions.
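JavaScript has no built-in UTF-32 string type, but a `Uint32Array` can stand in for one. The sketch below, with the made-up name `toUtf32`, stores one code point per 32 bit element to show both the fixed-length indexing and the memory cost.

```javascript
// Hypothetical helper: store each code point of a string in its own u32.
function toUtf32(text) {
  // Array.from iterates a string by code point, not by code unit.
  const codePoints = Array.from(text, (character) => character.codePointAt(0));
  return Uint32Array.from(codePoints);
}

const helloUtf32 = toUtf32("Hello");
console.log(helloUtf32.length); // 5 code points
console.log(helloUtf32.byteLength); // 20 bytes, where ASCII would need 5
console.log(helloUtf32[1].toString(16)); // "65", indexing lands directly on "e"
```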

Saving space with UTF-16

Instead of using a u32, let’s attempt to encode our codepoints in a smaller base unit, the 16 bit u16.

Size Max Code Points Visual Bit Size

-------- --------------- -------------------------

Size of a u16: 16 bits 65,536 0000_0000_0000_0000

Minimum to fit Unicode: 21 bits 2,097,152 0_0000_0000_0000_0000_0000

Bits needed for Unicode: 5 bits ^ ^^^^

Now we have a different sort of problem, there aren’t quite enough bits to fully represent the Unicode code point space. Let’s ignore this for a moment, and see how our word "Hello" encodes into UTF-16.

UTF-16 ASCII

============================ ==============

Hex Binary Hex Binary

------ ------------------- ---- --------

H 0x0048 0000_0000_0100_1000 0x48 100_1000

e 0x0065 0000_0000_0110_0101 0x65 110_0101

l 0x006c 0000_0000_0110_1100 0x6c 110_1100

l 0x006c 0000_0000_0110_1100 0x6c 110_1100

o 0x006f 0000_0000_0110_1111 0x6f 110_1111

The memory size is already looking much better! Now we only have a few bytes of “wasted” zero values. There is still the problem of those pesky missing 5 bits, but for now let’s see what we can represent with a single u16. For this we’ll take a small digression into looking at how Unicode organizes its code points.
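The same "Hello" measurement can be sketched for UTF-16 with a `Uint16Array`. The helper name `toUtf16` is made up for this example; it relies on `charCodeAt`, which returns the 16 bit code unit at an index.

```javascript
// Hypothetical helper: copy a string's UTF-16 code units into a Uint16Array.
function toUtf16(text) {
  const codeUnits = new Uint16Array(text.length);
  for (let i = 0; i < text.length; i++) {
    codeUnits[i] = text.charCodeAt(i);
  }
  return codeUnits;
}

const helloUtf16 = toUtf16("Hello");
console.log(helloUtf16.byteLength); // 10 bytes, half of the 20 needed with a u32 per letter
console.log(helloUtf16[0].toString(16).padStart(4, "0")); // "0048"
```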

Unicode Blocks

The first term to discuss is the Unicode block. This is the basic unit for organizing characters: a range of contiguous code points that represents some kind of logical grouping. The blocks are not uniform in size.

The first block should be familiar. It’s the Basic Latin code block. This is the range U+0000 to U+007F, and is backwards compatible with ASCII. The next code block is the Latin-1 Supplement. Together with Basic Latin these two are backwards compatible with the ISO/IEC 8859-1 Latin 1 encoding.

These blocks don’t stop there, and quickly diverge from the familiar Latin-based characters, to the most common scripts that are used across the globe. The table below contains samples of these blocks (a full listing is available here).

----------------------------------- -------------------------- ---------------------------------------

U+0000 - U+007F Basic Latin U+0E00 - U+0E7F Thai U+2000 - U+206F General Punctuation

U+0080 - U+00FF Latin-1 Supplement U+0E80 - U+0EFF Lao U+2190 - U+21FF Arrows

U+0370 - U+03FF Greek and Coptic U+0F00 - U+0FFF Tibetan U+2200 - U+22FF Mathematical Operators

U+0400 - U+04FF Cyrillic U+1000 - U+109F Myanmar U+2580 - U+259F Block Elements

U+0530 - U+058F Armenian U+13A0 - U+13FF Cherokee U+25A0 - U+25FF Geometric Shapes

U+0590 - U+05FF Hebrew U+1800 - U+18AF Mongolian U+2700 - U+27BF Dingbats

U+0600 - U+06FF Arabic U+1B00 - U+1B7F Balinese U+2C80 - U+2CFF Coptic

U+0700 - U+074F Syriac U+1B80 - U+1BBF Sundanese U+3040 - U+309F Hiragana

U+0980 - U+09FF Bengali U+1BC0 - U+1BFF Batak U+30A0 - U+30FF Katakana

U+0B80 - U+0BFF Tamil U+1C00 - U+1C4F Lepcha U+FFF0 - U+FFFF Specials

... ... ...
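Since a block is just a contiguous range, looking one up is a simple range check. The sketch below uses a handful of rows copied from the table above; `SAMPLE_BLOCKS` and `findBlock` are made-up names, and the list is deliberately incomplete.

```javascript
// A small, incomplete sample of blocks taken from the table above.
const SAMPLE_BLOCKS = [
  { start: 0x0000, end: 0x007f, name: "Basic Latin" },
  { start: 0x0080, end: 0x00ff, name: "Latin-1 Supplement" },
  { start: 0x0370, end: 0x03ff, name: "Greek and Coptic" },
  { start: 0x0400, end: 0x04ff, name: "Cyrillic" },
  { start: 0x3040, end: 0x309f, name: "Hiragana" },
  { start: 0x30a0, end: 0x30ff, name: "Katakana" },
];

// Hypothetical helper: find the sample block containing a code point.
function findBlock(codePoint) {
  const block = SAMPLE_BLOCKS.find(
    ({ start, end }) => codePoint >= start && codePoint <= end
  );
  return block ? block.name : "(not in this sample)";
}

console.log(findBlock(0x0061)); // "Basic Latin"
console.log(findBlock(0x00e9)); // "Latin-1 Supplement"
console.log(findBlock(0x3068)); // "Hiragana"
console.log(findBlock(0x30c8)); // "Katakana"
```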

Unicode planes

Now the next unit of organization beyond blocks is the plane. This term is more tightly coupled to the idea of memory. Each plane is a fixed size of 65,536 code points. This fits perfectly into a u16. The blocks listed above all fit into the first plane, the “Basic Multilingual Plane”. Most of the planes are still unassigned, and a full listing can be found here.

Plane Range

-------------------------------- ---------------------

Basic Multilingual Plane 0x00_0000 - 0x00_FFFF

Supplementary Multilingual Plane 0x01_0000 - 0x01_FFFF

Supplementary Ideographic Plane 0x02_0000 - 0x02_FFFF

Tertiary Ideographic Plane 0x03_0000 - 0x03_FFFF

...all other planes... 0x04_0000 – 0x10_FFFF
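Because every plane holds exactly 65,536 (or 2 to the 16th power) code points, the plane number is simply whatever remains after dropping the lowest 16 bits. A quick sketch, with `planeOf` as a made-up name:

```javascript
// Hypothetical helper: compute the plane number (0 to 16) of a code point.
function planeOf(codePoint) {
  if (!Number.isInteger(codePoint) || codePoint < 0 || codePoint > 0x10ffff) {
    throw new RangeError("Not a valid Unicode code point.");
  }
  // Dropping the low 16 bits leaves the plane number.
  return codePoint >> 16;
}

console.log(planeOf(0x3068)); // 0, the Basic Multilingual Plane
console.log(planeOf(0x1f44d)); // 1, the Supplementary Multilingual Plane
console.log(planeOf(0x10ffff)); // 16, the last plane
console.log(17 * 65536); // 1114112, the total number of code points
```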

Fitting Unicode into a u16

The Basic Multilingual Plane contains the most common scripts in use today. It’s also quite interesting that a single plane fits into a u16. Given these two constraints, let’s encode some more interesting characters into UTF-16. For this let’s take the Japanese hiragana and katakana characters となりのトトロ which make up the movie title for “My Neighbor Totoro”.

=====================================

Hex Binary Kana

------ ----------------- --------

U+3068 00110000_01101000 と

U+306a 00110000_01101010 な

U+308a 00110000_10001010 り

U+306e 00110000_01101110 の

U+30c8 00110000_11001000 ト

U+30c8 00110000_11001000 ト

U+30ed 00110000_11101101 ロ

Inspecting these values we can see that there are far fewer zeros in them. The high byte is 00110000 or 0x30 for every character. Looking at the Basic Multilingual Plane blocks above, we can see that these fit into the U+3040 - U+309F Hiragana and U+30A0 - U+30FF Katakana ranges of code points. So this is great, rather than just Latin characters, we can represent Japanese scripts, amongst many others. While the values 00110000 are repeating, they are not the seemingly “wasted space” of the 00000000 from the high byte of the Latin1 and ASCII encoded code points.

This is all great, but leaves the question, what happens if you want to encode something that is outside of the basic multilingual plane? For that we will examine the following emoji:

👍 U+1F44D

Copying and pasting this emoji into your search engine of choice, you can quickly look up the code point for a given symbol, which here is U+1F44D. The first thing you may notice is that the value for this code point is beyond the Basic Multilingual Plane. Referring to the planes table above, we can identify that the range 0x01_0000 to 0x01_FFFF encompasses this code point value of 0x01_F44D. This plane is the Supplementary Multilingual Plane.

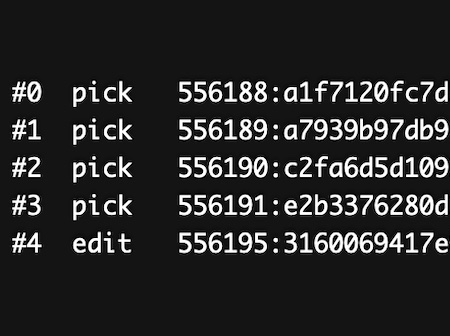

For a hint of how this code point is encoded, open up the web console if your browser has it, and type the following code:

"👍".length

>> 2

A single character requires two u16 values to encode it.
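A few more console checks make the difference visible. This sketch writes the emoji as the escape `\u{1F44D}` so it survives copy and paste; the variable name is arbitrary.

```javascript
const thumbsUp = "\u{1F44D}";

// .length counts u16 code units.
console.log(thumbsUp.length); // 2

// Array.from walks the string by code point instead.
console.log(Array.from(thumbsUp).length); // 1

// codePointAt reads the full code point starting at the given code unit index.
console.log(thumbsUp.codePointAt(0).toString(16)); // "1f44d"
```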

Code units, code points, and high and low surrogates

I’m glad I did not lead this article with that title, or else my readers would have stopped right there. However, if you’ve followed along so far, let’s dive in.

Technically UTF-16 is a variable length format, and indexing into an array does not give you a code point but a code unit. This is because higher-plane values like the 👍 require two code units for a single code point. Let’s open up the web console again and poke at some implementation details.

"👍"[0]

>> "\ud83d"

"👍"[1]

>> "\udc4d"

=======================================

Hex Binary Emoji

-------- ------------------- ------

0x1_F44D 1_11110100_01001101 "👍"

0x0_D83D 11011000_00111101 "👍"[0]

0x0_DC4D 11011100_01001101 "👍"[1]

Here indexing into the emoji reveals two different code units. The first is U+D83D, and the second is U+DC4D. Right away we can see that both of these fit into the Basic Multilingual Plane, so it’s time to look up the code block to which they belong in that plane. The first is in range of the High Surrogates code block (U+D800 - U+DBFF) while the second is in range of the Low Surrogates (U+DC00 - U+DFFF).
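Checking which surrogate block a code unit belongs to is a pair of range comparisons. A minimal sketch, with made-up helper names:

```javascript
// Hypothetical helper: is the code unit in the High Surrogates block?
function isHighSurrogate(codeUnit) {
  return codeUnit >= 0xd800 && codeUnit <= 0xdbff;
}

// Hypothetical helper: is the code unit in the Low Surrogates block?
function isLowSurrogate(codeUnit) {
  return codeUnit >= 0xdc00 && codeUnit <= 0xdfff;
}

const emoji = "\u{1F44D}"; // 👍
console.log(isHighSurrogate(emoji.charCodeAt(0))); // true, 0xd83d
console.log(isLowSurrogate(emoji.charCodeAt(1))); // true, 0xdc4d
console.log(isHighSurrogate("a".charCodeAt(0))); // false
```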

Now for the magic: A high and low surrogate code unit can be combined together using bit math to form the higher plane code point that would not normally fit in a u16. The following table illustrates this visually.

===================================================

Code Block Binary

-------------- ------------- -----------------

High Surrogate Min Range 11011000_00000000

High Surrogate Max Range 11011011_11111111

High Surrogate "Leading tag" 110110___________

High Surrogate Encoded Value ______11_11111111

Low Surrogate Min Range 11011100_00000000

Low Surrogate Max Range 11011111_11111111

Low Surrogate "Leading tag" 110111___________

Low Surrogate Encoded Value ______11_11111111

U+D83D High Surrogate 11011000_00111101 "👍"[0]

U+D83D "Leading tag" 110110 "👍"[0]

U+D83D Encoded value 00_00111101 "👍"[0]

U+DC4D Low Surrogate 11011100_01001101 "👍"[1]

U+DC4D "Leading tag" 110111 "👍"[1]

U+DC4D Encoded value 00_01001101 "👍"[1]

0000_11110100_00000000 The high surrogate "encoded value", shifted left by 10 bits.

0000_00000000_01001101 The low surrogate "encoded value".

0000_11110100_01001101 The two values combined.

0001_00000000_00000000 Magically add 0x10000.

0001_11110100_01001101 Matches the 👍 code point, 0x1F44D.

To verify this we can write some code in the web console to do this operation. Feel free to skip this example if you haven’t done bit fiddling operations before.

function combineSurrogates(high, low) {

// Validate the high surrogate.

if ((high >> 10) !== 0b110110) {

throw new Error("Not a high surrogate.");

}

// Validate the low surrogate.

if ((low >> 10) !== 0b110111) {

throw new Error("Not a low surrogate.");

}

// Remove the "leading tag" to only keep the value.

const highValue = high & 0b11_11111111;

const lowValue = low & 0b11_11111111;

const magicAdd = 0b1_00000000_00000000;

// Combine the high and low values together using bit operations.

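// The outer parentheses matter: + binds more tightly than |, so without them

// the add would be applied to lowValue before the values are combined.

const codePoint = ((highValue << 10) | lowValue) + magicAdd;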

// Transform the code point into a string.

return String.fromCodePoint(codePoint);

}

combineSurrogates(0xd83d, 0xdc4d);

// "👍"

We can also do the reverse of turning a code point into a high and low surrogate pair.

function toSurrogatePair(codePoint) {

if (codePoint < 0x1_0000) {

throw new Error("Code point is in the basic multilingual plane.");

}

// Reverse the magical add of 0b1_00000000_00000000 from the code point.

const magicAdd = 0x1_0000;

const transformed = codePoint - magicAdd;

// Compute the high and low values.

const highValue = transformed >> 10;

const lowValue = transformed & 0b11_11111111;

// Generate the tag portion of the surrogates.

const highTag = 0b110110 << 10;

const lowTag = 0b110111 << 10;

// Combine the tags and the values.

const highSurrogate = highTag | highValue;

const lowSurrogate = lowTag | lowValue;

return [highSurrogate, lowSurrogate];

}

toSurrogatePair(0x1_F44D);

// [ 0xd83d, 0xdc4d ]
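One way to gain confidence in this bit math is to compare it against what the JavaScript engine itself produces. The sketch below restates the conversion with plain arithmetic (adding 0xD800 and 0xDC00 is equivalent to OR-ing in the tags, since their low 10 bits are zero) and checks a few code points. Both function names are made up for this example.

```javascript
// Hypothetical helper: compute the surrogate pair with arithmetic.
function surrogatesByArithmetic(codePoint) {
  const offset = codePoint - 0x10000;
  return [0xd800 + (offset >> 10), 0xdc00 + (offset & 0x3ff)];
}

// Hypothetical helper: ask the engine to encode the code point, then read
// back the two code units it produced.
function surrogatesByEngine(codePoint) {
  const text = String.fromCodePoint(codePoint);
  return [text.charCodeAt(0), text.charCodeAt(1)];
}

for (const codePoint of [0x10000, 0x1f44d, 0x130d3, 0x10ffff]) {
  const [high, low] = surrogatesByArithmetic(codePoint);
  const [engineHigh, engineLow] = surrogatesByEngine(codePoint);
  console.log(
    codePoint.toString(16),
    high.toString(16),
    low.toString(16),
    high === engineHigh && low === engineLow
  );
}
// 10000 d800 dc00 true
// 1f44d d83d dc4d true
// 130d3 d80c dcd3 true
// 10ffff dbff dfff true
```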

Poking more at JavaScript’s UTF-16

Looking into more of how JavaScript encodes the values, you can see other interesting patterns. First let’s build a function that will output a nicely formatted text table of the UTF-16 encoded values.

function getCodeUnitsTable(utf16string) {

// Build a plain text table of the results.

let results = `

===================================

Code Binary Letter

------ ------------------- ------

`;

for (let i = 0; i < utf16string.length; i++) {

// Use the String.prototype.codePointAt method to inspect the underlying code unit.

// https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/String/codePointAt

//

// Aside: codePointAt is slightly smart with surrogate pairs, but here using the

// utf16string[i].codePointAt(0) pattern will give us the code unit rather than

// code point.

const letter = utf16string[i];

const codeUnit = letter.codePointAt(0);

let binary = codeUnit.toString(2).padStart(16, '0');

binary = "0b" + binary.slice(0, 8) + '_' + binary.slice(8);

const hex = "0x" + codeUnit.toString(16).padStart(4, '0');

results += `${hex} ${binary} ${letter}\n`;

}

return results;

}

Now we can inspect some strings. First off is a Latin1 string.

getCodeUnitsTable("¡Hola! ¿Qué tal?");

===================================

Code Binary Letter

------ ------------------- ------

0x00a1 0b00000000_10100001 ¡

0x0048 0b00000000_01001000 H

0x006f 0b00000000_01101111 o

0x006c 0b00000000_01101100 l

0x0061 0b00000000_01100001 a

0x0021 0b00000000_00100001 !

0x0020 0b00000000_00100000

0x00bf 0b00000000_10111111 ¿

0x0051 0b00000000_01010001 Q

0x0075 0b00000000_01110101 u

0x00e9 0b00000000_11101001 é

0x0020 0b00000000_00100000

0x0074 0b00000000_01110100 t

0x0061 0b00000000_01100001 a

0x006c 0b00000000_01101100 l

0x003f 0b00000000_00111111 ?

From here we can see that the high byte for the entire string is still just 0. If we ignore the high byte, and only use the low byte values, this would be a valid Latin1 ISO/IEC 8859-1 string. In fact, this is an optimization that JavaScript engines already do, since they are required to operate with UTF-16 strings. Inspecting SpiderMonkey’s source shows frequent mentions of Latin1 encoded strings. This creates some additional complexity, but can cut the string storage size in half for web apps that use Latin1 strings. This would change the encoding from using a u16 to a u8 for these special strings.
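To get a feel for the idea, here is a sketch of the check such an optimization depends on: a string can be stored with one byte per character only if every code unit is 0xFF or lower. This is an illustration only and not how SpiderMonkey actually implements it; the helper names are made up.

```javascript
// Hypothetical helper: true when every code unit fits in a single byte.
function fitsInLatin1(text) {
  for (let i = 0; i < text.length; i++) {
    if (text.charCodeAt(i) > 0xff) {
      return false;
    }
  }
  return true;
}

// Hypothetical helper: store a Latin1-compatible string with a u8 per character.
function toLatin1Bytes(text) {
  if (!fitsInLatin1(text)) {
    throw new Error("The string contains code units above 0xff.");
  }
  const bytes = new Uint8Array(text.length);
  for (let i = 0; i < text.length; i++) {
    bytes[i] = text.charCodeAt(i);
  }
  return bytes;
}

// "¡Hola! ¿Qué tal?" written with escapes for the non-ASCII characters.
const greeting = "\u00a1Hola! \u00bfQu\u00e9 tal?";
console.log(fitsInLatin1(greeting)); // true
console.log(toLatin1Bytes(greeting).byteLength); // 16 bytes, versus 32 as u16 values
console.log(fitsInLatin1("\u3068")); // false, と needs the full u16
```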

Of course, the internet is a global resource, so much of the content is not Latin1 encoded. Repeating the example of “My Neighbor Totoro” text from above, you can see that a JavaScript engine would need to use the full u16. These characters are still in the basic multilingual plane.

getCodeUnitsTable("となりのトトロ");

===================================

Code Binary Letter

------ ------------------- ------

0x3068 0b00110000_01101000 と

0x306a 0b00110000_01101010 な

0x308a 0b00110000_10001010 り

0x306e 0b00110000_01101110 の

0x30c8 0b00110000_11001000 ト

0x30c8 0b00110000_11001000 ト

0x30ed 0b00110000_11101101 ロ

However, things change when going beyond to the supplementary multilingual plane. Let’s look at some Egyptian hieroglyphs using our function above.

getCodeUnitsTable("𓃓𓆡𓆣𓂀𓅠");

Running this code now creates a tricky situation. I can’t directly paste the results into my editor window. This happens because the text has surrogate pairs. When we try to create the text output using getCodeUnitsTable above, indexing the string one code unit at a time splits each pair, which generates invalid (unpaired) code units. A high surrogate must have a matching low surrogate, or programs get angry (or at least they should do something to handle it). The fact that untrusted code units can be malformed UTF-16 is a great way to introduce bugs into a system. Because of this, you shouldn’t naively take in UTF-16 input without validating its encoding. Browsers do a lot here to protect users from these encoding issues.
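As a rough sketch of what validating the encoding could look like, the function below walks the code units and reports whether every high surrogate is immediately followed by a low surrogate, and no low surrogate appears on its own. The name `isWellFormedUtf16` is made up for this example.

```javascript
// Hypothetical helper: true when the string has no unpaired surrogates.
function isWellFormedUtf16(text) {
  for (let i = 0; i < text.length; i++) {
    const codeUnit = text.charCodeAt(i);
    if (codeUnit >= 0xd800 && codeUnit <= 0xdbff) {
      // A high surrogate must be followed by a low surrogate. Past the end of
      // the string charCodeAt returns NaN, which fails both comparisons.
      const next = text.charCodeAt(i + 1);
      if (!(next >= 0xdc00 && next <= 0xdfff)) {
        return false;
      }
      // Skip the low surrogate that was just checked.
      i++;
    } else if (codeUnit >= 0xdc00 && codeUnit <= 0xdfff) {
      // A low surrogate that was not preceded by a high surrogate.
      return false;
    }
  }
  return true;
}

console.log(isWellFormedUtf16("\ud83d\udc4d")); // true, a complete pair
console.log(isWellFormedUtf16("\ud83d")); // false, a lone high surrogate
console.log(isWellFormedUtf16("\udc4d\ud83d")); // false, the pair is reversed
```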

Here is the slightly amended table that my editor will actually let me paste into this document.

==============================================

Code Binary Letter Surrogate

------ ------------------- ------ ---------

0xd80c 0b11011000_00001100 \ud80c High

0xdcd3 0b11011100_11010011 \udcd3 Low

0xd80c 0b11011000_00001100 \ud80c High

0xdda1 0b11011101_10100001 \udda1 Low

0xd80c 0b11011000_00001100 \ud80c High

0xdda3 0b11011101_10100011 \udda3 Low

0xd80c 0b11011000_00001100 \ud80c High

0xdc80 0b11011100_10000000 \udc80 Low

0xd80c 0b11011000_00001100 \ud80c High

0xdd60 0b11011101_01100000 \udd60 Low

In order to inspect the actual code points in UTF-16 encoded JavaScript, we need to build a separate utility that knows the difference between code units, and code points. It needs to properly handle surrogate pairs.

function getCodePointsTable(string) {

let results = `

==============================

Code Point Surrogates Letter

---------- ---------- ------

`;

// Loop through the code units.

for (let i = 0; i < string.length; i++) {

// The codePointAt function will correctly read the high and low surrogate to get

// the code point, and not the code unit. However, the index used is still the code

// unit index, not the code point index.

const value = string.codePointAt(i);

// Format the code point nicely.

let codePoint = value.toString(16).padStart(6, '0');

codePoint = 'U+' + codePoint.slice(0, 2) + '_' + codePoint.slice(2);

let letter;

let surrogate;

if (value < 0x10000) {

// This is not a surrogate pair. It's a code point that is in the basic

// multilingual plane.

surrogate = 'No '

letter = string[i];

} else {

// This code point is in a higher plane and involves a surrogate pair.

surrogate = 'Yes'

// We can't run `string[i]` as it would only get the high surrogate. Instead, slice

// out the entire codepoint.

letter = string.slice(i, i + 2);

// Skip the low surrogate. This is where UTF-16 is a _variable length encoding_.

i++;

}

results += `${codePoint} ${surrogate} ${letter}\n`

}

return results;

}

Now run this with some of the glyphs from above, but include some Latin1 characters as well.

getCodePointsTable("Glyphs: 𓃓𓆡𓆣𓂀𓅠");

==============================

Code Point Surrogates Letter

---------- ---------- ------

U+00_0047 No G

U+00_006c No l

U+00_0079 No y

U+00_0070 No p

U+00_0068 No h

U+00_0073 No s

U+00_003a No :

U+00_0020 No

U+01_30d3 Yes 𓃓

U+01_31a1 Yes 𓆡

U+01_31a3 Yes 𓆣

U+01_3080 Yes 𓂀

U+01_3160 Yes 𓅠
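For everyday code there is a shorter route than tracking indexes by hand: JavaScript's string iterator (used by `for...of` and `Array.from`) already walks a string by code point and keeps surrogate pairs together. A small sketch, with `listCodePoints` as a made-up name:

```javascript
// Hypothetical helper: collect the code points of a string using the
// built-in string iterator.
function listCodePoints(text) {
  const codePoints = [];
  for (const character of text) {
    codePoints.push(character.codePointAt(0));
  }
  return codePoints;
}

// "Glyphs: " followed by two hieroglyphs, written as escapes.
const glyphs = "Glyphs: \u{130D3}\u{131A1}";
console.log(glyphs.length); // 12 code units
console.log(listCodePoints(glyphs).length); // 10 code points
console.log(listCodePoints(glyphs).slice(-2).map((value) => value.toString(16)));
// [ "130d3", "131a1" ]
```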

Now we can see what happens when characters are used outside of the basic multilingual plane, and the extra care that is needed to process and work with this text. This might seem overly complex for building a typical web app, but bugs will come up if a developer tries to do text processing naively. It’s possible to break the encoding and have improperly encoded UTF-16.

In fact, let’s do that.

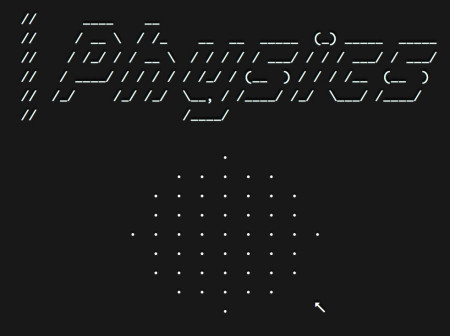

Broken Surrogate Pairs

I posted a tweet with a broken surrogate pair on Twitter. It turns out that Twitter did the right thing and stripped it out. However, I tried again with a reply, this time putting an unmatched low surrogate mid-tweet. The second time the input visually notified me of my breakage, and would not let me send the tweet.

The browser is permissive in what it will display, and will show a glyph with the surrogate’s value when encountering an unmatched surrogate. This is because the DOMString is not technically UTF-16. It’s typically interpreted as UTF-16.

From MDN:

DOMString is a sequence of 16-bit unsigned integers, typically interpreted as UTF-16 code units.

Building a web page is a messy process. Servers and developers make mistakes all of the time. Having a hard error when an encoding issue in UTF-16 comes up is against the principles of displaying a webpage.

You can try this yourself:

var brokenString = "👍"[1] + "👍"[0];

var p = document.createElement("p");

p.innerText = brokenString;

document.body.appendChild(p);

Firefox, Safari, and Chrome each handle the surrogates a little bit differently. Firefox shows a glyph representing the hex value, Chrome shows the “replacement character”: (� U+FFFD), while Safari shows nothing at all. The DOMString in all three is still the broken UTF-16.

There are also other standards that can deal with the realities of messy UTF-16 encodings. One such is a USVString, which replaces the broken surrogates with the “replacement character”: (� U+FFFD). This turns the string into one that is then safe to process. Similarly there is WTF-8, or “Wobbly Transformation Format – 8-bit”, which allows for encoding unpaired surrogates in a UTF-8-like form.
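The replacement behavior described for USVString can be approximated by hand. The sketch below is an illustration of the idea rather than the browser's implementation: it copies well-formed pairs through untouched and swaps every unpaired surrogate for U+FFFD. The name `replaceLoneSurrogates` is made up.

```javascript
// Hypothetical helper: replace every unpaired surrogate with U+FFFD.
function replaceLoneSurrogates(text) {
  let result = "";
  for (let i = 0; i < text.length; i++) {
    const codeUnit = text.charCodeAt(i);
    const isHigh = codeUnit >= 0xd800 && codeUnit <= 0xdbff;
    const isLow = codeUnit >= 0xdc00 && codeUnit <= 0xdfff;
    if (isHigh) {
      const next = text.charCodeAt(i + 1);
      if (next >= 0xdc00 && next <= 0xdfff) {
        // A complete pair: keep both code units and skip past the low one.
        result += text[i] + text[i + 1];
        i++;
        continue;
      }
    }
    // Any surrogate that reaches this point is unpaired.
    result += (isHigh || isLow) ? "\ufffd" : text[i];
  }
  return result;
}

const broken = "a\udc4d\ud83db"; // The 👍 surrogates in the wrong order.
console.log(replaceLoneSurrogates(broken) === "a\ufffd\ufffdb"); // true
console.log(replaceLoneSurrogates("\ud83d\udc4d") === "\ud83d\udc4d"); // true
```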

One last thing

"👍🏽".length

// 4

At this point we’ve seen that the normal thumbs up emoji is made up of two code units. However, why does this single character have a length of 4?

getCodeUnitsTable("👍🏽")

===================================

Code Binary Letter

------ ------------------- ------

0xd83d 0b11011000_00111101 \ud83d

0xdc4d 0b11011100_01001101 \udc4d

0xd83c 0b11011000_00111100 \ud83c

0xdffd 0b11011111_11111101 \udffd

Looking at the code units, we can see that it’s made up of two pairs of surrogates.

getCodePointsTable("👍🏽");

==============================

Code Point Surrogates Letter

---------- ---------- ------

U+01_f44d Yes 👍

U+01_f3fd Yes 🏽

This last thumbs up example is a tease to show that there is more going on with how code points interact with each other.

What’s next?

So far we’ve covered imaginary text encodings, code points, the indexable UTF-32 encoding, and the slightly variable length UTF-16 encoding. There’s still plenty to cover with grapheme clusters, diacritical marks, and the oh so variable UTF-8 encoding. Stay tuned for the next part in this series.